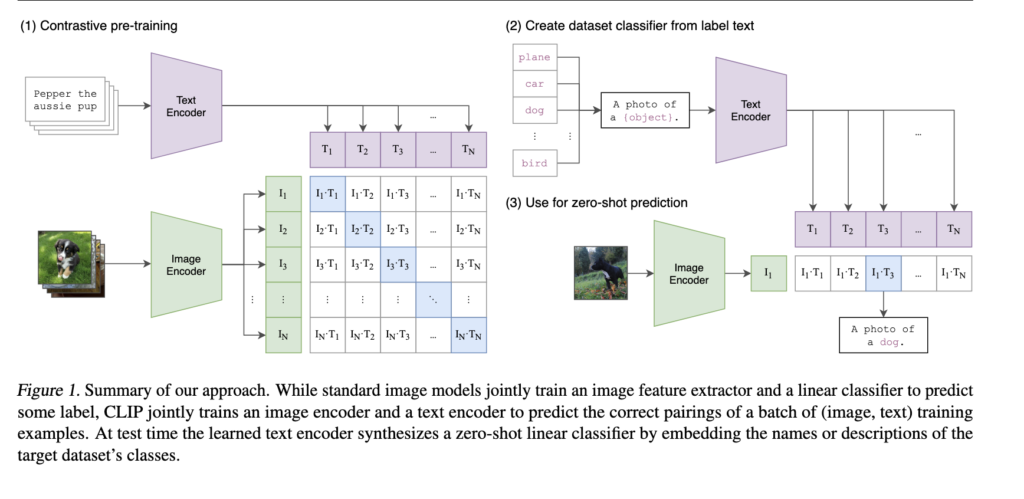

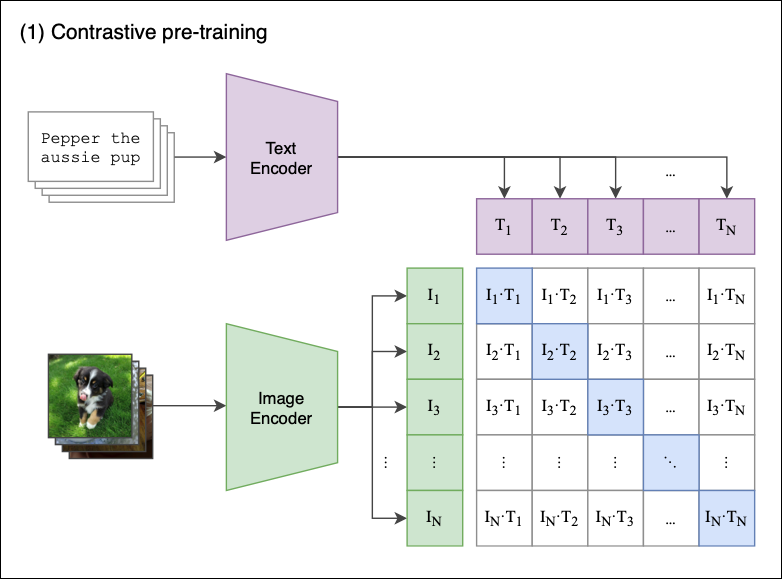

GitHub - openai/CLIP: CLIP (Contrastive Language-Image Pretraining), Predict the most relevant text snippet given an image

Natural language supervision with a large and diverse dataset builds better models of human high-level visual cortex | bioRxiv

CLIP: The Most Influential AI Model From OpenAI — And How To Use It | by Nikos Kafritsas | Towards Data Science

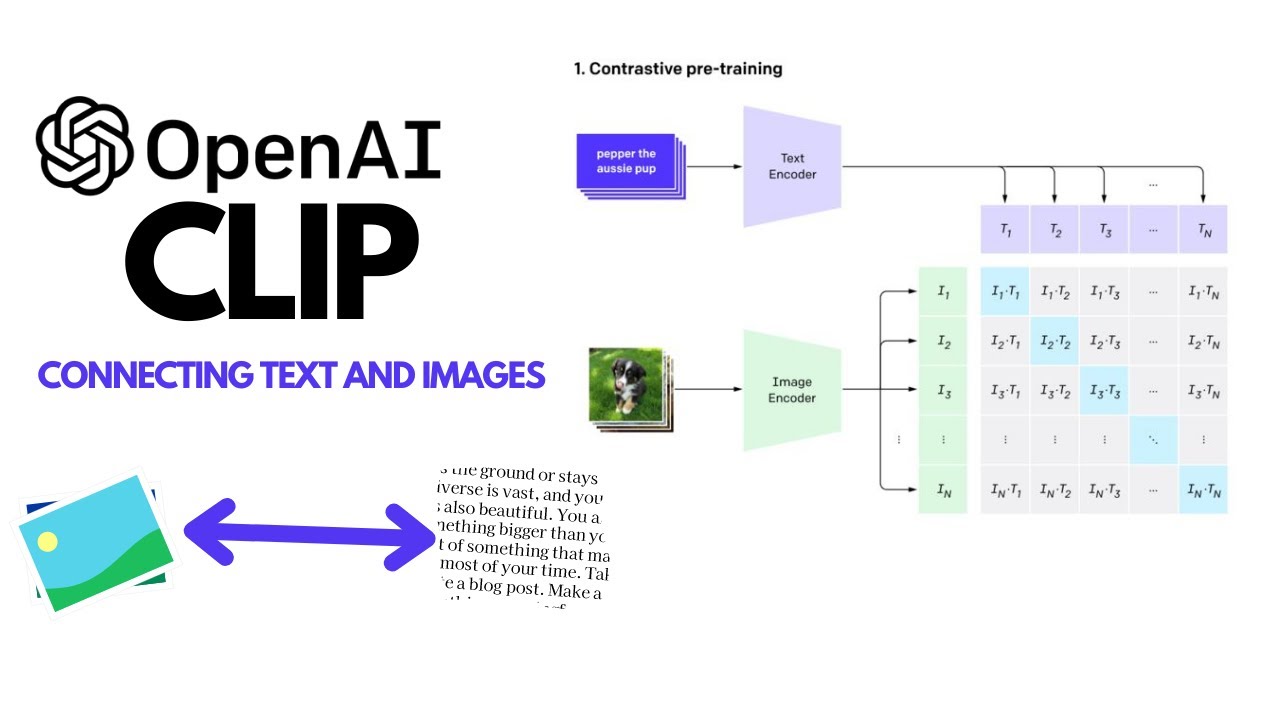

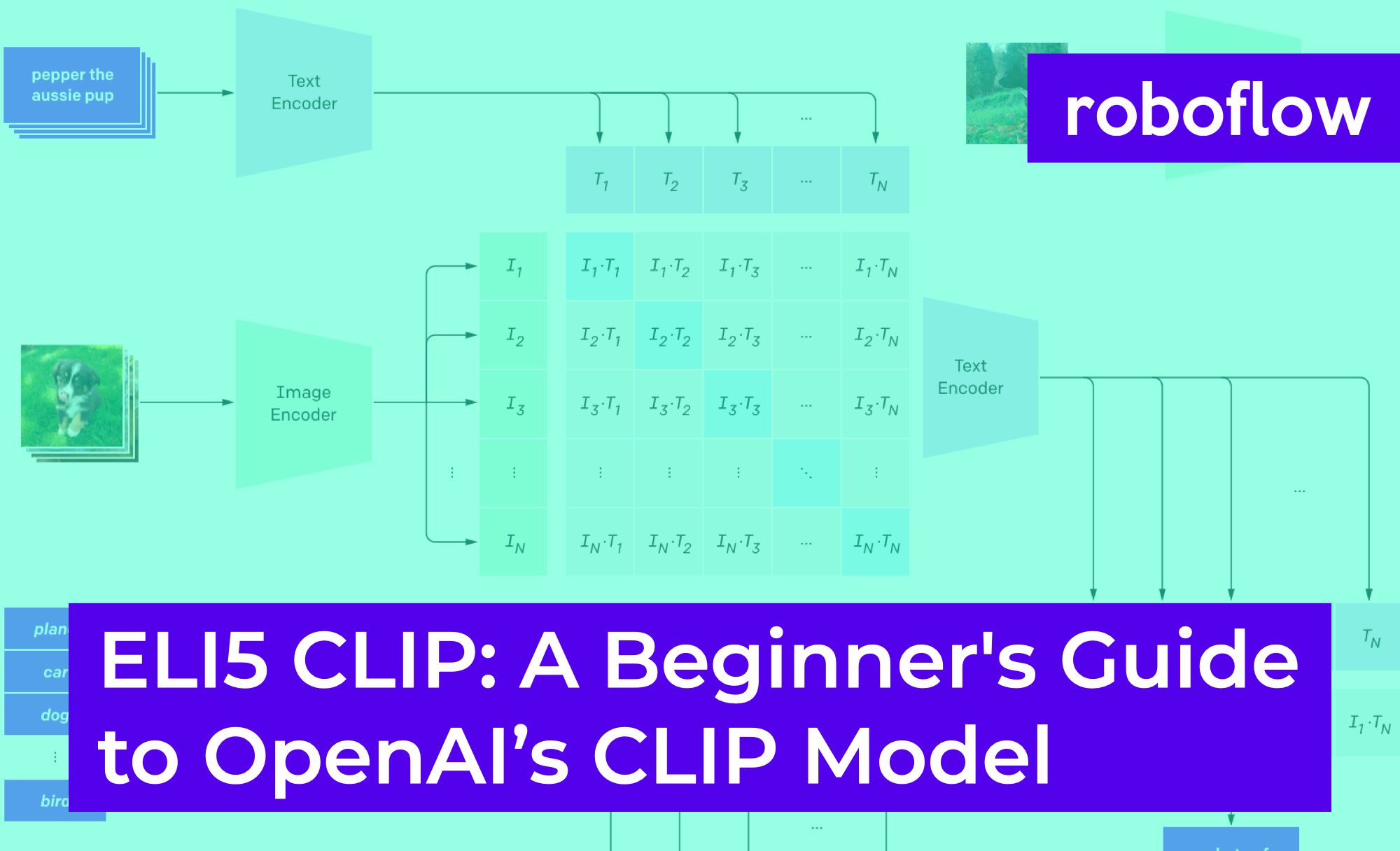

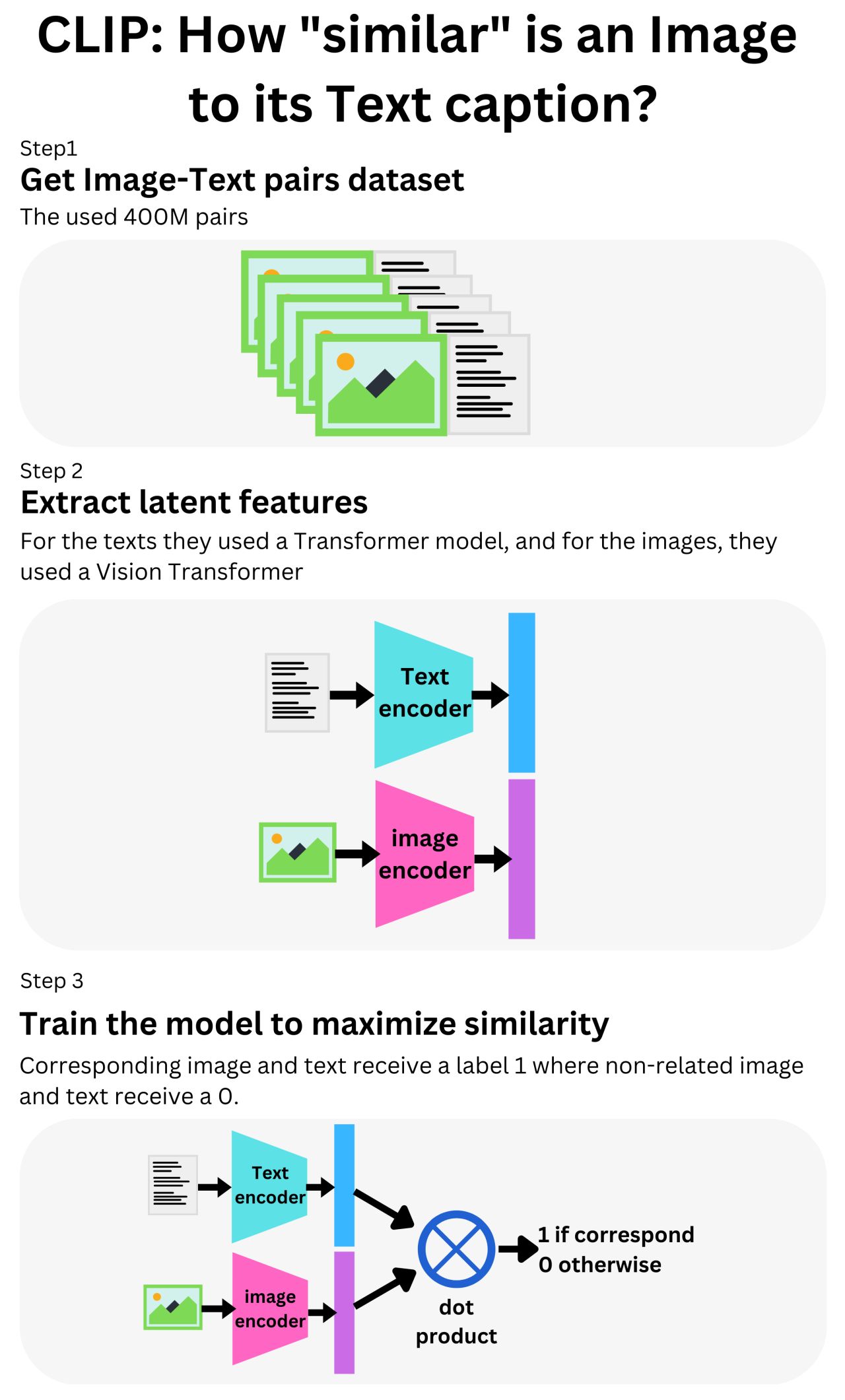

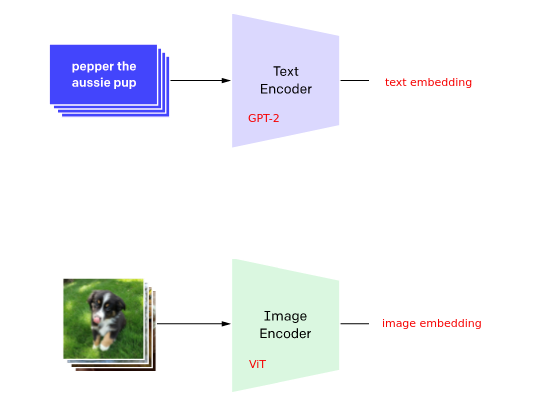

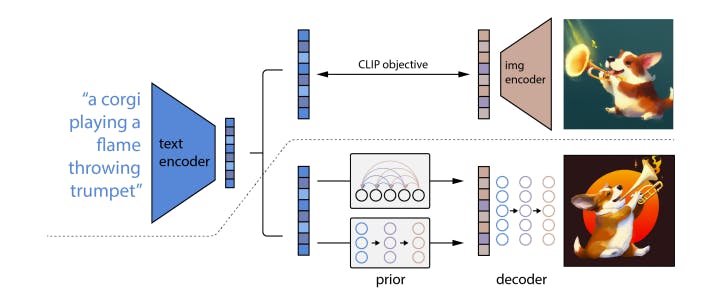

Understand CLIP (Contrastive Language-Image Pre-Training) — Visual Models from NLP | by mithil shah | Medium
